Performance

Audio/visual performance

SU will culminate in an audio/visual performance at Art Center Nabi in the last quarter of 2005. The performance will comprise:

- A multi-screen projection of a scaled 3D replication of the “volume” of space that makes up the precincts of Myoung-Dong (Seoul) and Smith Street (Melbourne). This screen-based performance environment will accompany the SU score in real time.

- A collaborative electroacoustic composition performed as a structured improvisation by Australian and Korean composer/performers.

The performance will last up to one hour, after which the SU visual engine will be made available to the public in a suitable space at Art Center Nabi.

Collaborative composition

The sound piece, or composition, to be performed for SU will take the form of a structured improvisation between Australian and Korean composer/performers.

Taking inspiration from the project rationale, each composer will develop a single movement of 5 to 7.5 minutes in duration. Composers may incorporate sounds contributed to the SU Moblog and/or their own field recordings. Sounds may also be generated from image sources, such as sonagrams of traditional instruments and voice. Traditional instrumentation may also be incorporated.

Performers will communicate via the SU Moblog, where ideas, processes, systems and even sounds may be shared. This ongoing communication, together with pre-production work carried out largely in isolation, will replace the need for traditional rehearsals.

SU Mesh

Designed for screen-based improvisation within a customised computer game engine, the SU visuals will comprise a scaled 3D replication of the “volume” of space that makes up the precincts of Myoung-Dong (Seoul) and Smith Street (Melbourne).

This environment will be overlaid with a quilt-like pastiche of images and video clips harvested from the SU Moblog. Where possible, these assets will be placed close to their original locations using GPS data collected throughout the project. The result will give the screen artist(s) a diverse and rich landscape in which to create visual narratives in parallel with the composer/performers.

SU will use the Unreal Engine, developed by Epic Games for its networked Unreal Tournament games. The work will draw on 1.5 years of research and development into using these tools for interactive screen-based performance, notably Drift Theory by John Power and Andrew Garton (Floating Point, Melbourne, January 2004) and the Son of Science Ensemble performances (Melbourne, February, April and September 2004).

Sample 3D performance incorporating maps (L-R: John Power, Steve Law, Andrew Garton), Son of Science, 2004.

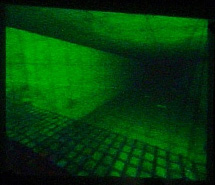

Sample 3D volume, approximately 1 km in length, containing floating objects, each with audio samples assigned to it. (Power, Garton, Floating Point, 2004.)

All weaponry and other game-related items will be removed and replaced by visual devices that aid both navigation and the visual dynamism of the performance. Although the environment will be pre-produced, each performance's engagement with it will be entirely unique.

After the collaborative performance, the engine will be exhibited as an installation at Art Center Nabi.